k8s Pi cluster

- 1. Overview of the activity

- 2. Downloading the latest Hypriot OS image

- 3. Building the images

- 4. Master node

- 4.3. kubelet.service.d/10-kubeadm.conf service file

- 5. Worker nodes

- 6. Flannel network

- 7. Dashboard

- 8. Uninstalling the control plane

- 9. Links

1. Overview of the activity

This activity consists of building a k8s cluster on an ARM platform with one master node and four worker nodes. Once the hardware is at hand, deployment takes roughly one hour.

Software

Hypriot OS is a distribution specialized in provisioning ARM platforms (the most popular being the Raspberry Pi) for Docker. It is derived from Raspbian, and the image natively supports cloud-init. A "flash" utility from the same project makes it easier to write the image to the SD card.

The cluster is deployed with kubeadm, which makes it possible to deploy a proof-of-concept (PoC) k8s cluster.

Versions

- Hypriot OS: 1.10.0

- Linux Kernel: 4.14.98

- Docker: 18.06.3-ce

- Kubernetes: 1.14.1

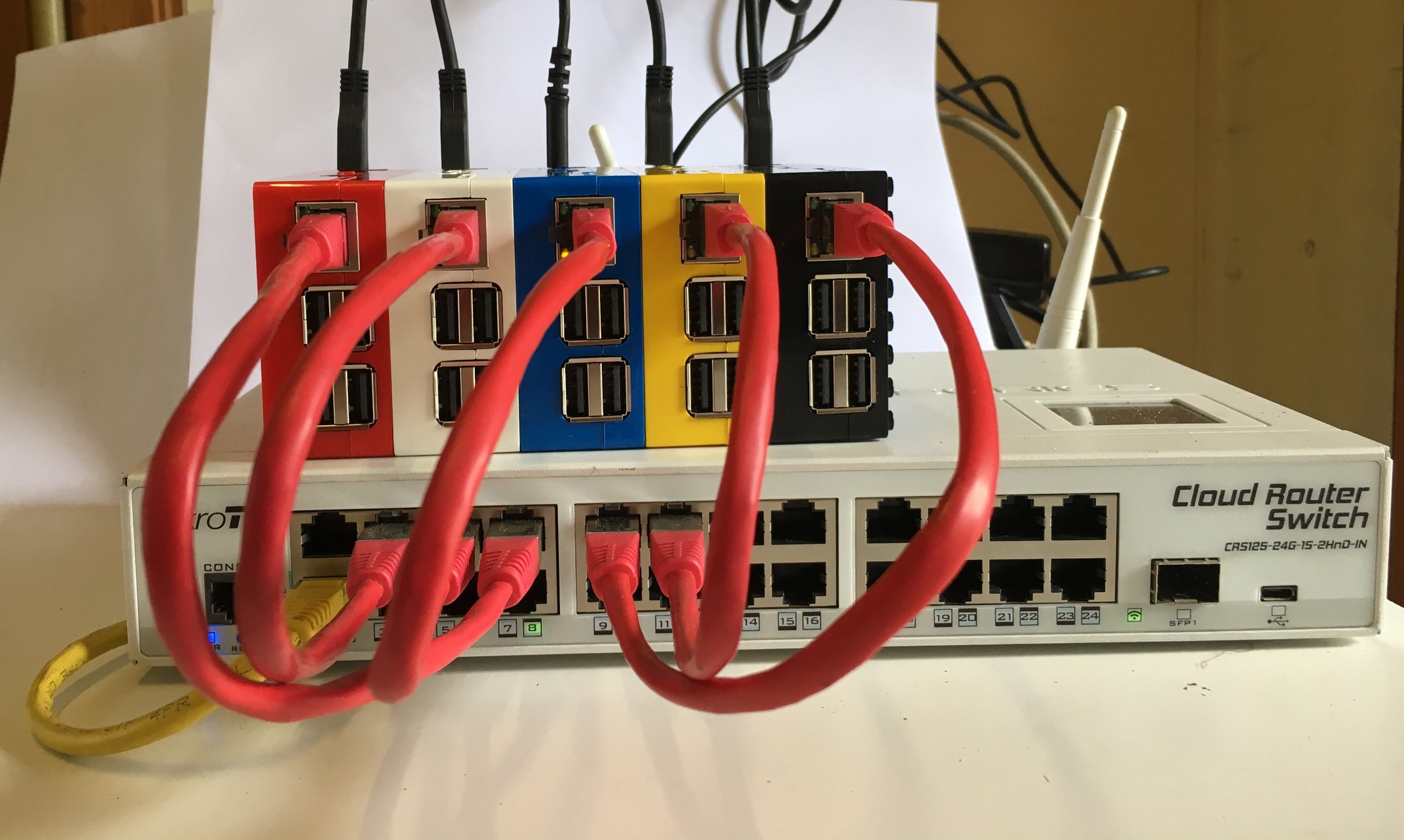

Hardware

The hardware used here is as follows.

- 5 X Rpi 3 B+ (4 x BCM2837 @ 1.4GHz, Dual-band 2.4GHz & 5GHz 802.11b/g/n/ac, Gigabit Ethernet (max. 300Mbit) via USB 2.0, BLE 4.2)

- 5 X Transcend 8GB microSD Ultimate 600x Class 10 UHS-I + Adapter - MLC - 90MB/s

- 1 X Mikrotik CRS125-24G-1S-2HnD

- 1 X USB charger, at least 5 ports

Connectivity

The stack sits behind a router and has a good Internet connection. Each node obtains its IPv4 address through a permanent DHCP lease. A local DNS service correctly resolves each node's name to its IPv4 address.

| Name | IPv4 | MAC |
|---|---|---|
| gateway | 192.168.87.1 | d4:ca:6d:1e:79:4b |
| k8s-master | 192.168.87.38 | b8:27:eb:a3:9e:7a |
| k8s-node1 | 192.168.87.37 | b8:27:eb:c2:f7:7a |
| k8s-node2 | 192.168.87.36 | b8:27:eb:81:51:6b |
| k8s-node3 | 192.168.87.34 | b8:27:eb:99:fd:4a |
| k8s-node4 | 192.168.87.35 | b8:27:eb:f9:6f:e9 |

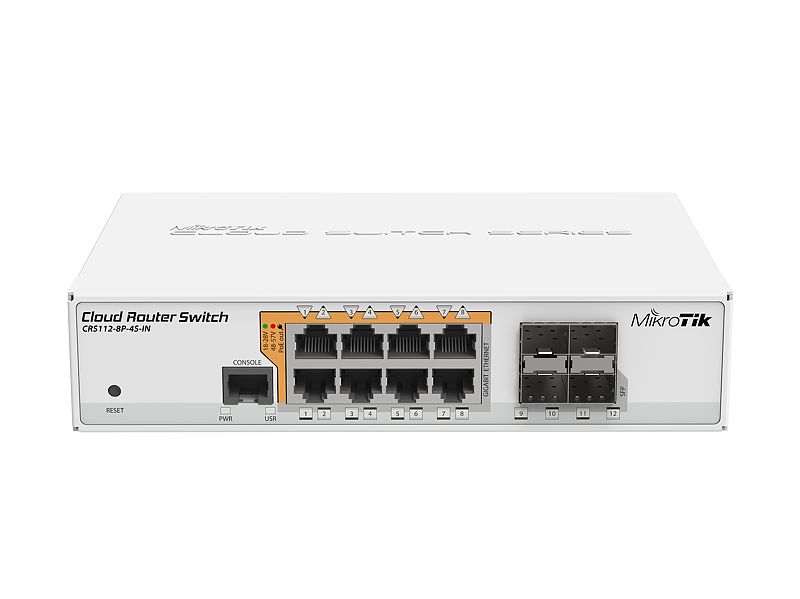
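Name resolution can be checked before going further. The sketch below is only an illustration: it assumes a Linux workstation on the same LAN with `getent` available, and the function name `check_dns` and its `name=address` argument format are hypothetical. Run on a node itself, the node's own name may resolve to a loopback address instead.

```shell
#!/bin/sh
# Compare the address returned by the resolver with the expected one.
# Each argument has the form name=address (illustrative convention).
check_dns() {
    status=0
    for pair in "$@"; do
        name=${pair%%=*}
        ip=${pair#*=}
        # getent prints "address name ..."; keep the first address only.
        got=$(getent hosts "$name" | awk '{ print $1; exit }')
        if [ "$got" = "$ip" ]; then
            echo "ok   $name -> $got"
        else
            echo "FAIL $name: expected $ip, got ${got:-nothing}"
            status=1
        fi
    done
    return $status
}
```

With the addresses from the table, a call could look like `check_dns k8s-master=192.168.87.38 k8s-node1=192.168.87.37 k8s-node2=192.168.87.36 k8s-node3=192.168.87.34 k8s-node4=192.168.87.35`.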

Budget (excluding VAT)

This section gives an idea of the cost of complete Raspberry Pi 3 B+ units, the power supply and the network. A Lego-style case makes it easy to stack or separate the Rpis. For power, a multi-port USB charger with the appropriate USB cables is recommended. For connectivity, any router/switch will do. Mikrotik hardware is chosen here.

| Hardware | Unit price (excl. VAT) | Quantity | Total |
|---|---|---|---|
| Raspberry Pi 3 B+ | 32.19 EUR | 5 | 160.95 EUR |
| 8 GB SD card | 7.40 EUR | 5 | 37.00 EUR |
| Lego-style cases | 5.74 EUR | 5 | 28.70 EUR |
| Anker PowerPort 10 60W 10-port USB charger | 19.99 EUR | 1 | 19.99 EUR |
| MicroUSB Cable USB-A to Micro-B - 1 meter | 2.44 EUR | 5 | 12.20 EUR |
| Mikrotik CRS112-8G-4S-IN | 104.85 EUR | 1 | 104.85 EUR |
| Total | - | - | 363.69 EUR |

Networking could be combined with power by using a PoE switch/router and PoE modules for the Raspberry Pi. Would this solution be more elegant? Is the added board compatible with the case (space, heat dissipation, ...)?

| Hardware | Unit price (excl. VAT) | Quantity | Total |
|---|---|---|---|
| Raspberry Pi 3 B+ | 32.19 EUR | 5 | 160.95 EUR |
| 8 GB SD card | 7.40 EUR | 5 | 37.00 EUR |
| Lego-style cases | 5.74 EUR | 5 | 28.70 EUR |
| Mikrotik CRS112-8P-4S-IN | 131.61 EUR | 1 | 131.61 EUR |
| Power over Ethernet (PoE) HAT for RPi 3B+ | 18.97 EUR | 5 | 94.85 EUR |
| CAT6 network cable - Black - 1m | 2.44 EUR | 5 | 12.20 EUR |
| Total | - | - | 465.31 EUR |

The price difference between a lab without integrated PoE power and a lab with PoE power is 101.62 EUR (+28%).
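The totals and the percentage can be re-checked with a few lines of shell. This is only a sketch: the helper name `sum_budget` is illustrative, and the unit prices and quantities are copied from the two tables above.

```shell
#!/bin/sh
# Sum "unit_price quantity" pairs read from stdin (illustrative helper).
sum_budget() {
    awk '{ total += $1 * $2 } END { printf "%.2f\n", total }'
}

# Lab without PoE: Pi, SD card, case, USB charger, USB cable, switch.
no_poe=$(printf '%s\n' '32.19 5' '7.40 5' '5.74 5' '19.99 1' '2.44 5' '104.85 1' | sum_budget)

# Lab with PoE: Pi, SD card, case, PoE switch, PoE HAT, network cable.
poe=$(printf '%s\n' '32.19 5' '7.40 5' '5.74 5' '131.61 1' '18.97 5' '2.44 5' | sum_budget)

echo "without PoE: $no_poe EUR"
echo "with PoE:    $poe EUR"

# Difference in EUR, and as a percentage of the lab without PoE.
awk -v a="$no_poe" -v b="$poe" \
    'BEGIN { printf "difference:  %.2f EUR (+%.0f%%)\n", b - a, (b - a) / a * 100 }'
```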

2. Downloading the latest Hypriot OS image

https://blog.hypriot.com/downloads/

wget --no-check-certificate https://github.com/hypriot/image-builder-rpi/releases/download/v1.10.0/hypriotos-rpi-v1.10.0.img.zip

wget --no-check-certificate https://github.com/hypriot/image-builder-rpi/releases/download/v1.10.0/hypriotos-rpi-v1.10.0.img.zip.sha256

sha256sum -c hypriotos-rpi-v1.10.0.img.zip.sha256 && unzip hypriotos-rpi-v1.10.0.img.zip

3. Building the images

The binary of the "flash" utility also needs to be downloaded.

flash -u master-user-data hypriotos-rpi-v1.10.0.img

Sample run:

Is /dev/disk2 correct? yes

Unmounting /dev/disk2 ...

Unmount of all volumes on disk2 was successful

Unmount of all volumes on disk2 was successful

Flashing hypriotos-rpi-v1.10.0.img to /dev/rdisk2 ...

1000MiB 0:00:38 [25.8MiB/s] [======================================================================>] 100%

0+16000 records in

0+16000 records out

1048576000 bytes transferred in 38.755888 secs (27055915 bytes/sec)

Mounting Disk

Mounting /dev/disk2 to customize...

Copying cloud-init master-user-data to /Volumes/HypriotOS/user-data ...

Unmounting /dev/disk2 ...

"disk2" ejected.

Finished.

master-user-data file

#cloud-config

# vim: syntax=yaml

#

# The current version of cloud-init in the Hypriot rpi-64 is 0.7.6

# When dealing with cloud-init, it is SUPER important to know the version

# I have wasted many hours creating servers to find out the module I was trying to use wasn't in the cloud-init version I had

# Documentation: http://cloudinit.readthedocs.io/en/0.7.9/index.html

# Set your hostname here, the manage_etc_hosts will update the hosts file entries as well

hostname: k8s-master

manage_etc_hosts: true

# You could modify this for your own user information

users:
  - name: pirate
    gecos: "pirate user"
    sudo: ALL=(ALL) NOPASSWD:ALL
    shell: /bin/bash
    groups: users,docker,video,input
    plain_text_passwd: testtest
    lock_passwd: false

ssh_pwauth: true

chpasswd: { expire: false }

# # Set the locale of the system

locale: "en_US.UTF-8"

# # Set the timezone

# # Value of 'timezone' must exist in /usr/share/zoneinfo

# timezone: "Europe/Paris"

# # Update apt packages on first boot

# package_update: true

# package_upgrade: true

# package_reboot_if_required: true

package_upgrade: false

# # Install any additional apt packages you need here

# packages:

# - ntp

# # WiFi connect to HotSpot

# # - use `wpa_passphrase SSID PASSWORD` to encrypt the psk

#write_files:

# - content: |

# allow-hotplug wlan0

# iface wlan0 inet dhcp

# wpa-conf /etc/wpa_supplicant/wpa_supplicant.conf

# iface default inet dhcp

# path: /etc/network/interfaces.d/wlan0

# - content: |

# country=fr

# ctrl_interface=DIR=/var/run/wpa_supplicant GROUP=netdev

# update_config=1

# network={

# ssid="k8s-pi"

# psk=perruche

# }

# path: /etc/wpa_supplicant/wpa_supplicant.conf

# These commands will be ran once on first boot only

runcmd:

# Pickup the hostname changes

- 'systemctl restart avahi-daemon'

# # Activate WiFi interface

# - 'ifup wlan0'

node1-user-data file

#cloud-config

# vim: syntax=yaml

#

# The current version of cloud-init in the Hypriot rpi-64 is 0.7.6

# When dealing with cloud-init, it is SUPER important to know the version

# I have wasted many hours creating servers to find out the module I was trying to use wasn't in the cloud-init version I had

# Documentation: http://cloudinit.readthedocs.io/en/0.7.9/index.html

# Set your hostname here, the manage_etc_hosts will update the hosts file entries as well

hostname: k8s-node1

manage_etc_hosts: true

# You could modify this for your own user information

users:
  - name: pirate
    gecos: "pirate user"
    sudo: ALL=(ALL) NOPASSWD:ALL
    shell: /bin/bash
    groups: users,docker,video,input
    plain_text_passwd: testtest
    lock_passwd: false

ssh_pwauth: true

chpasswd: { expire: false }

# # Set the locale of the system

locale: "en_US.UTF-8"

# # Set the timezone

# # Value of 'timezone' must exist in /usr/share/zoneinfo

# timezone: "Europe/Paris"

# # Update apt packages on first boot

# package_update: true

# package_upgrade: true

# package_reboot_if_required: true

package_upgrade: false

# # Install any additional apt packages you need here

# packages:

# - ntp

# # WiFi connect to HotSpot

# # - use `wpa_passphrase SSID PASSWORD` to encrypt the psk

#write_files:

# - content: |

# allow-hotplug wlan0

# iface wlan0 inet dhcp

# wpa-conf /etc/wpa_supplicant/wpa_supplicant.conf

# iface default inet dhcp

# path: /etc/network/interfaces.d/wlan0

# - content: |

# country=fr

# ctrl_interface=DIR=/var/run/wpa_supplicant GROUP=netdev

# update_config=1

# network={

# ssid="k8s-pi"

# psk=perruche

# }

# path: /etc/wpa_supplicant/wpa_supplicant.conf

# These commands will be ran once on first boot only

runcmd:

# Pickup the hostname changes

- 'systemctl restart avahi-daemon'

# # Activate WiFi interface

# - 'ifup wlan0'
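The two cloud-init files differ only in their `hostname` value, so the worker files can be derived from the master file rather than edited by hand. A minimal sketch, assuming the master file is named `master-user-data` as above; the function name `make_node_user_data` and the `nodeN-user-data` output names for nodes 2 to 4 are illustrative.

```shell
#!/bin/sh
# Derive one cloud-init file per worker from the master file by
# rewriting only the "hostname:" line.
make_node_user_data() {
    template=$1   # e.g. master-user-data
    count=$2      # number of worker nodes
    i=1
    while [ "$i" -le "$count" ]; do
        sed "s/^hostname: .*/hostname: k8s-node$i/" "$template" > "node$i-user-data"
        i=$((i + 1))
    done
}
```

Calling `make_node_user_data master-user-data 4` would write `node1-user-data` to `node4-user-data` in the current directory; each file can then be passed to `flash -u` for the matching SD card.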

4. Master node

4.1. Installing kubeadm

sudo su -

sudo apt update && sudo apt -y install dirmngr && \

sudo apt-key adv --keyserver hkp://keyserver.ubuntu.com:80 --recv-keys 6A030B21BA07F4FB

curl -s https://packagecloud.io/install/repositories/Hypriot/rpi/script.deb.sh | sudo bash

cat <<EOF >/etc/apt/sources.list.d/kubernetes.list

deb https://apt.kubernetes.io/ kubernetes-xenial main

EOF

apt-get update && apt-get install -y kubelet kubeadm kubectl
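The install command above takes whatever version the repository currently offers. To stay on the Kubernetes version listed in this document, the packages can be pinned. This is a sketch under assumptions: the function name is illustrative, and the `1.14.1-00` package revision string is assumed rather than taken from the original; `apt-cache madison kubeadm` lists the versions actually available.

```shell
#!/bin/sh
# Install one fixed Kubernetes version and keep apt from upgrading it.
# Run as root, after the apt sources above have been configured.
install_pinned_k8s() {
    version=$1   # e.g. 1.14.1-00 (assumed package revision format)
    apt-get update &&
    apt-get install -y "kubelet=$version" "kubeadm=$version" "kubectl=$version" &&
    apt-mark hold kubelet kubeadm kubectl
}
```

The same function would apply unchanged on the worker nodes, for example `install_pinned_k8s 1.14.1-00`.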

4.2. Downloading the images

kubeadm config images pull -v3

Output:

I0427 15:52:58.829608 3378 initconfiguration.go:105] detected and using CRI socket: /var/run/dockershim.sock

I0427 15:52:58.832413 3378 version.go:171] fetching Kubernetes version from URL: https://dl.k8s.io/release/stable-1.txt

I0427 15:53:00.388070 3378 feature_gate.go:226] feature gates: &{map[]}

[config/images] Pulled k8s.gcr.io/kube-apiserver:v1.14.1

[config/images] Pulled k8s.gcr.io/kube-controller-manager:v1.14.1

[config/images] Pulled k8s.gcr.io/kube-scheduler:v1.14.1

[config/images] Pulled k8s.gcr.io/kube-proxy:v1.14.1

[config/images] Pulled k8s.gcr.io/pause:3.1

[config/images] Pulled k8s.gcr.io/etcd:3.3.10

[config/images] Pulled k8s.gcr.io/coredns:1.3.1

4.3. kubelet.service.d/10-kubeadm.conf service file

In the software context described in this document, and contrary to some recommendations, the kubeadm service file for the kubelet must include the KUBELET_NETWORK_ARGS environment variable in the value of the ExecStart key.

Without this value, an error occurs while the control plane is being initialized: NetworkReady=false reason:NetworkPluginNotReady message:docker: network plugin is not ready: cni config uninitialized.

The file /etc/systemd/system/kubelet.service.d/10-kubeadm.conf should look like this.

vi /etc/systemd/system/kubelet.service.d/10-kubeadm.conf

[Service]

Environment="KUBELET_KUBECONFIG_ARGS=--bootstrap-kubeconfig=/etc/kubernetes/bootstrap-kubelet.conf --kubeconfig=/etc/kubernetes/kubelet.conf"

Environment="KUBELET_CONFIG_ARGS=--config=/var/lib/kubelet/config.yaml"

# This is a file that "kubeadm init" and "kubeadm join" generates at runtime, populating the KUBELET_KUBEADM_ARGS variable dynamically

EnvironmentFile=-/var/lib/kubelet/kubeadm-flags.env

# This is a file that the user can use for overrides of the kubelet args as a last resort. Preferably, the user should use

# the .NodeRegistration.KubeletExtraArgs object in the configuration files instead. KUBELET_EXTRA_ARGS should be sourced from this file.

EnvironmentFile=-/etc/default/kubelet

ExecStart=

ExecStart=/usr/bin/kubelet $KUBELET_KUBECONFIG_ARGS $KUBELET_CONFIG_ARGS $KUBELET_KUBEADM_ARGS $KUBELET_EXTRA_ARGS $KUBELET_NETWORK_ARGS
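After a systemd drop-in has been edited, systemd has to re-read its unit files before the change takes effect. The sketch below checks that the ExecStart line passes KUBELET_NETWORK_ARGS, then reloads systemd and restarts the kubelet. Both function names are illustrative; run it as root.

```shell
#!/bin/sh
# Return success if the ExecStart line of a drop-in passes the given variable.
dropin_passes_var() {
    file=$1
    var=$2
    grep '^ExecStart=/' "$file" | grep -qF "\$$var"
}

# Make systemd re-read the edited drop-in, then restart the kubelet.
apply_kubelet_dropin() {
    file=$1
    if dropin_passes_var "$file" KUBELET_NETWORK_ARGS; then
        systemctl daemon-reload && systemctl restart kubelet
    else
        echo "KUBELET_NETWORK_ARGS missing from ExecStart in $file" >&2
        return 1
    fi
}
```

Typical use would be `apply_kubelet_dropin /etc/systemd/system/kubelet.service.d/10-kubeadm.conf`.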

4.4. Initializing the control plane

MASTER_IP="192.168.87.44"

sudo kubeadm init \

--pod-network-cidr 10.244.0.0/16 \

--apiserver-advertise-address=${MASTER_IP} \

--token-ttl=0 \

--ignore-preflight-errors=ALL

We pass in --token-ttl=0 so that the token never expires; do not use this setting in production. With a time-limited token, a new one has to be created on the master with kubeadm token create once the initial token has expired.

Output:

[init] Using Kubernetes version: v1.14.1

[preflight] Running pre-flight checks

[WARNING Port-6443]: Port 6443 is in use

[WARNING Port-10251]: Port 10251 is in use

[WARNING Port-10252]: Port 10252 is in use

[WARNING FileAvailable--etc-kubernetes-manifests-kube-apiserver.yaml]: /etc/kubernetes/manifests/kube-apiserver.yaml already exists

[WARNING FileAvailable--etc-kubernetes-manifests-kube-controller-manager.yaml]: /etc/kubernetes/manifests/kube-controller-manager.yaml already exists

[WARNING FileAvailable--etc-kubernetes-manifests-kube-scheduler.yaml]: /etc/kubernetes/manifests/kube-scheduler.yaml already exists

[WARNING FileAvailable--etc-kubernetes-manifests-etcd.yaml]: /etc/kubernetes/manifests/etcd.yaml already exists

[WARNING IsDockerSystemdCheck]: detected "cgroupfs" as the Docker cgroup driver. The recommended driver is "systemd". Please follow the guide at https://kubernetes.io/docs/setup/cri/

[WARNING Port-10250]: Port 10250 is in use

[WARNING Port-2379]: Port 2379 is in use

[WARNING Port-2380]: Port 2380 is in use

[WARNING DirAvailable--var-lib-etcd]: /var/lib/etcd is not empty

[preflight] Pulling images required for setting up a Kubernetes cluster

[preflight] This might take a minute or two, depending on the speed of your internet connection

[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Activating the kubelet service

[certs] Using certificateDir folder "/etc/kubernetes/pki"

[certs] Using existing etcd/ca certificate authority

[certs] Using existing etcd/server certificate and key on disk

[certs] Using existing etcd/healthcheck-client certificate and key on disk

[certs] Using existing apiserver-etcd-client certificate and key on disk

[certs] Using existing etcd/peer certificate and key on disk

[certs] Using existing front-proxy-ca certificate authority

[certs] Using existing front-proxy-client certificate and key on disk

[certs] Using existing ca certificate authority

[certs] Using existing apiserver-kubelet-client certificate and key on disk

[certs] Using existing apiserver certificate and key on disk

[certs] Using the existing "sa" key

[kubeconfig] Using kubeconfig folder "/etc/kubernetes"

[kubeconfig] Using existing kubeconfig file: "/etc/kubernetes/admin.conf"

[kubeconfig] Using existing kubeconfig file: "/etc/kubernetes/kubelet.conf"

[kubeconfig] Using existing kubeconfig file: "/etc/kubernetes/controller-manager.conf"

[kubeconfig] Using existing kubeconfig file: "/etc/kubernetes/scheduler.conf"

[control-plane] Using manifest folder "/etc/kubernetes/manifests"

[control-plane] Creating static Pod manifest for "kube-apiserver"

[control-plane] Creating static Pod manifest for "kube-controller-manager"

[control-plane] Creating static Pod manifest for "kube-scheduler"

[etcd] Creating static Pod manifest for local etcd in "/etc/kubernetes/manifests"

[wait-control-plane] Waiting for the kubelet to boot up the control plane as static Pods from directory "/etc/kubernetes/manifests". This can take up to 4m0s

[apiclient] All control plane components are healthy after 0.404265 seconds

[upload-config] storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace

[kubelet] Creating a ConfigMap "kubelet-config-1.14" in namespace kube-system with the configuration for the kubelets in the cluster

[upload-certs] Skipping phase. Please see --experimental-upload-certs

[mark-control-plane] Marking the node k8s-master as control-plane by adding the label "node-role.kubernetes.io/master=''"

[mark-control-plane] Marking the node k8s-master as control-plane by adding the taints [node-role.kubernetes.io/master:NoSchedule]

[bootstrap-token] Using token: zaqygk.evoezgtonfjxb248

[bootstrap-token] Configuring bootstrap tokens, cluster-info ConfigMap, RBAC Roles

[bootstrap-token] configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials

[bootstrap-token] configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token

[bootstrap-token] configured RBAC rules to allow certificate rotation for all node client certificates in the cluster

[bootstrap-token] creating the "cluster-info" ConfigMap in the "kube-public" namespace

[addons] Applied essential addon: CoreDNS

[addons] Applied essential addon: kube-proxy

Your Kubernetes control-plane has initialized successfully!

4.5. Output to note

kubeadm join 192.168.87.44:6443 \

--token 9xzqfp.4o0bz4wozno3re84 \

--discovery-token-ca-cert-hash sha256:a106030e7a42ba6170f99b861f8695b447844cda5e97903e1ce35ef2cdd9599f
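If the join command is lost, or the token was created with a limited lifetime, kubeadm can print a new one. A minimal sketch to run as root on the master; the wrapper name `new_join_command` is illustrative, while `--ttl` and `--print-join-command` are options of `kubeadm token create`.

```shell
#!/bin/sh
# Create a new bootstrap token and print the matching "kubeadm join" command.
new_join_command() {
    ttl=${1:-24h}   # token lifetime; 24h is kubeadm's own default
    kubeadm token create --ttl "$ttl" --print-join-command
}
```

For example, `new_join_command 1h` would print a join command whose token stops working after one hour.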

4.6. Environment

exit

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

export KUBECONFIG=$HOME/.kube/config

Verification

kubectl get pods --namespace=kube-system

5. Worker nodes

On each worker node (k8s-node1, k8s-node2, k8s-node3, k8s-node4, ...):

Become root:

sudo su -

Install kubelet, kubeadm and kubectl:

sudo apt update && sudo apt -y install dirmngr && \

sudo apt-key adv --keyserver hkp://keyserver.ubuntu.com:80 --recv-keys 6A030B21BA07F4FB

curl -s https://packagecloud.io/install/repositories/Hypriot/rpi/script.deb.sh | sudo bash

cat <<EOF >/etc/apt/sources.list.d/kubernetes.list

deb https://apt.kubernetes.io/ kubernetes-xenial main

EOF

apt-get update && apt-get install -y kubelet kubeadm kubectl

Join the cluster:

kubeadm join 192.168.87.44:6443 \

--token 9xzqfp.4o0bz4wozno3re84 \

--discovery-token-ca-cert-hash sha256:a106030e7a42ba6170f99b861f8695b447844cda5e97903e1ce35ef2cdd9599f

Output:

[preflight] Running pre-flight checks

[WARNING IsDockerSystemdCheck]: detected "cgroupfs" as the Docker cgroup driver. The recommended driver is "systemd". Please follow the guide at https://kubernetes.io/docs/setup/cri/

[preflight] Reading configuration from the cluster...

[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -oyaml'

[kubelet-start] Downloading configuration for the kubelet from the "kubelet-config-1.14" ConfigMap in the kube-system namespace

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Activating the kubelet service

[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

This node has joined the cluster:

* Certificate signing request was sent to apiserver and a response was received.

* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the control-plane to see this node join the cluster.

Check the nodes from the master:

kubectl get nodes

6. Flannel network

Flannel

kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

Verification

kubectl get services --namespace=kube-system

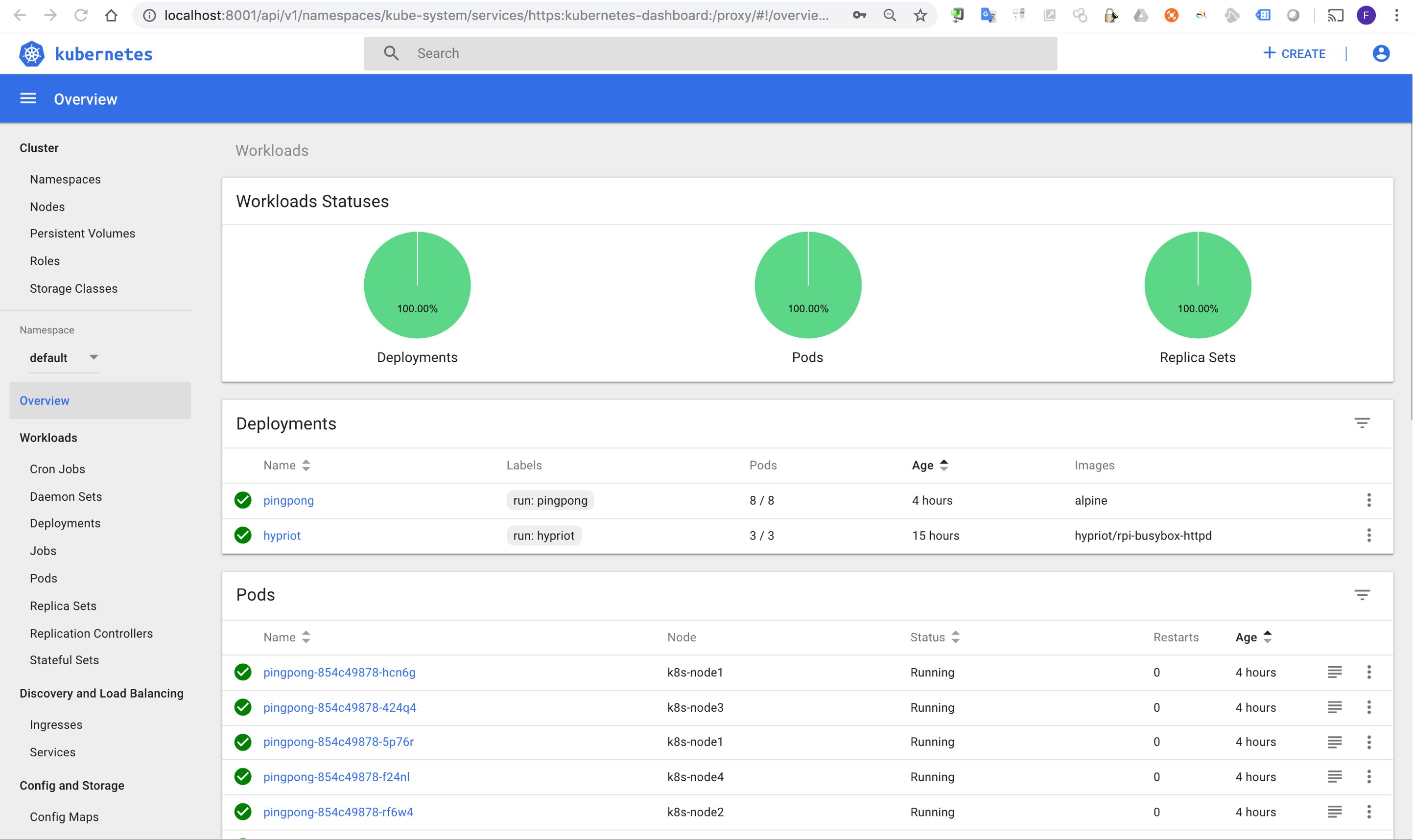
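Nodes usually stay NotReady until the pod network is up, so it can help to poll the node list after applying Flannel. A minimal sketch; the function names and the 10-second polling interval are illustrative, and the only cluster call used is `kubectl get nodes --no-headers`.

```shell
#!/bin/sh
# Count nodes whose STATUS column is exactly "Ready".
count_ready_nodes() {
    kubectl get nodes --no-headers | awk '$2 == "Ready" { n++ } END { print n + 0 }'
}

# Poll until at least $1 nodes are Ready, giving up after $2 attempts.
wait_for_nodes() {
    expected=$1
    tries=${2:-30}
    while [ "$tries" -gt 0 ]; do
        if [ "$(count_ready_nodes)" -ge "$expected" ]; then
            return 0
        fi
        tries=$((tries - 1))
        sleep 10
    done
    return 1
}
```

For this cluster, `wait_for_nodes 5` would wait for the master and the four workers, for up to about five minutes.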

7. Dashboard

https://github.com/kubernetes/dashboard/tree/v1.10.0/src/deploy

kubectl create -f https://raw.githubusercontent.com/kubernetes/dashboard/v1.10.0/src/deploy/recommended/kubernetes-dashboard-arm.yaml

kubectl create serviceaccount dashboard -n default

kubectl create clusterrolebinding dashboard-admin -n default --clusterrole=cluster-admin --serviceaccount=default:dashboard

kubectl get secret $(kubectl get serviceaccount dashboard -o jsonpath="{.secrets[0].name}") -o jsonpath="{.data.token}" | base64 --decode

kubectl proxy &
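With `kubectl proxy` running, the Dashboard is normally reached through the API server's service proxy path. The sketch below only builds that URL. The function name is illustrative, and the path assumes the `kubernetes-dashboard` service in the `kube-system` namespace created by the manifest above, served over HTTPS, with the proxy on its default port 8001.

```shell
#!/bin/sh
# Print the URL under which kubectl proxy exposes the Dashboard service.
dashboard_url() {
    port=${1:-8001}   # kubectl proxy listens on 8001 by default
    echo "http://localhost:$port/api/v1/namespaces/kube-system/services/https:kubernetes-dashboard:/proxy/"
}
```

Because the proxy only listens on the master's loopback interface, an SSH tunnel such as `ssh -L 8001:localhost:8001 pirate@k8s-master` (user and host taken from the cloud-init file above) would make the same URL usable from a workstation browser. The token printed by the previous command is then pasted into the login screen.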

Verification

kubectl get pods --all-namespaces

kubectl get secret,sa,role,rolebinding,services,deployments --namespace=kube-system | grep dashboard

kubectl get svc --namespace kube-system

Uninstalling the Dashboard

kubectl delete deployment kubernetes-dashboard --namespace=kube-system

kubectl delete service kubernetes-dashboard --namespace=kube-system

kubectl delete role kubernetes-dashboard-minimal --namespace=kube-system

kubectl delete rolebinding kubernetes-dashboard-minimal --namespace=kube-system

kubectl delete sa kubernetes-dashboard --namespace=kube-system

kubectl delete secret kubernetes-dashboard-certs --namespace=kube-system

kubectl delete secret kubernetes-dashboard-key-holder --namespace=kube-system

8. Uninstalling the control plane

sudo apt-get -y remove --purge kubeadm kubectl kubelet && sudo apt-get autoremove -y --purge

sudo rm -rf /var/lib/etcd /var/lib/kubelet /etc/kubernetes /etc/cni

docker stop $(docker ps -q)

docker rm $(docker ps -aq)

docker rmi $(docker images -q)
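`docker stop` and `docker rm` exit with an error when they are given no arguments, which is what happens when the container list is empty. A guarded variant might look like the following; the function name is illustrative.

```shell
#!/bin/sh
# Stop and remove all containers, skipping each step when the list is empty.
remove_all_containers() {
    running=$(docker ps -q)
    if [ -n "$running" ]; then
        # Unquoted on purpose: one argument per container ID.
        docker stop $running
    fi
    all=$(docker ps -aq)
    if [ -n "$all" ]; then
        docker rm $all
    fi
}
```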

9. Links

https://gist.github.com/goffinet/29d981a02409fee294e91a0e951bd6b8

https://blog.hypriot.com/downloads/

https://blog.hypriot.com/post/setup-kubernetes-raspberry-pi-cluster/

https://docs.traefik.io/#the-traefik-quickstart-using-docker

https://kubernetes.io/docs/setup/independent/install-kubeadm/

https://gist.github.com/alexellis/fdbc90de7691a1b9edb545c17da2d975

https://blog.alexellis.io/build-your-own-bare-metal-arm-cluster/

https://packagecloud.io/Hypriot/rpi/install

https://maxrichter.github.io/Kubernetes/

https://vim.moe/blog/build-a-kubernetes-cluster-with-raspberry-pis/

https://www.ecliptik.com/Raspberry-Pi-Kubernetes-Cluster/

https://github.com/kubernetes/dashboard

https://github.com/kubernetes/dashboard/tree/v1.10.0/src/deploy